How do neurons in the brain ensure we see both the forest and the trees?

New research by the Alessandra Angelucci Laboratory at the John A. Moran Eye Center at the University of Utah offers insight into how the brain combines visual information from parts of a scene into one larger scene.

Published in Neuron on October 10, the study examines neurons in the primary visual cortex, known as the V1 area of the brain. This part of the brain receives and processes information from the nerves in the eye.

Seeing the Bigger Picture

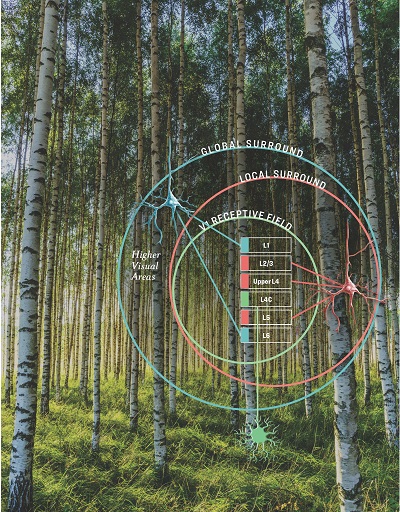

A V1 neuron processes only a tiny region of a visual scene, known as its receptive field. It might see, for example, only a tiny part of the bark on an aspen tree.

"Information through this small window is ambiguous," said Angelucci, MD, PhD, in a video explaining the research. "So the V1 neuron cannot figure out which object it is seeing. To understand what it is seeing, the neuron needs to integrate spatial information from outside its receptive field, that is in the surround of the receptive field."

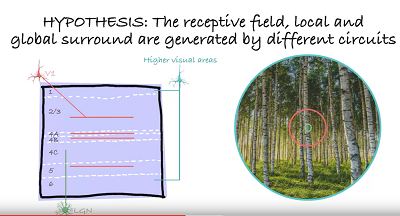

The study examined two regions outside the V1 neuron's receptive field: the "local surround" near the receptive field (also known as the "near" surround) and the "global surround" farther away from the receptive field (the "far" surround).

By adding information from the near surround, the V1 neuron can determine that the bark is part of a tree; by adding information from the far surround, the V1 neuron can determine that the tree is in a grove of other aspens.

"In the study, we asked, how do V1 neurons integrate local and global visual information from outside their receptive field?" explained Angelucci.

Area V1 consists of six stacked layers, with each layer receiving distinct connections from different parts of the brain.

The team recorded the electrophysiological responses of V1 neurons simultaneously across the six layers while presenting visual stimuli of increasing size. The stimuli covered the receptive field, the near surround, or the far surround.

The team found that visual stimuli of different sizes activate different layers of V1, indicating that different brain connections are involved in processing local (near) and global (far) stimuli in a visual scene, such as the aspen grove.

Mapping the Human Brain

The findings are part of the National Institutes of Health (NIH) BRAIN Initiative, which aims to revolutionize our understanding of the human brain by mapping how individual cells and complex neural circuits interact.

This knowledge is essential to understanding how vision occurs in the brain and how it might someday be reproduced through a prosthetic implant, the only option for irreparable damage caused by conditions such as retinal degenerations, glaucoma, and eye traumas.

The study was funded by the NIH, the National Science Foundation, Research to Prevent Blindness, the University of Utah Research Foundation and Neuroscience Initiative, and University of Utah neuroscience graduate program and postdoctoral fellowships.

Study authors also included Maryam Bijanzadeh, Lauri Nurminen, Sam Merlin, and Andrew M. Clark.

In a different study published in June in Nature Communications, Angelucci and her team also explored the function of feedback connections in the brain’s visual cortex that are believed to be involved in visual attention, expectation, and visual context.